Speech Synthesis & Speech Recognition

Using SAPI 4 Low Level Interfaces

Click here to download the files associated with this article.


This article looks at adding speech capabilities to Microsoft Windows applications written in Delphi, using the Microsoft Speech API version 4 (SAPI 4). For an overview of speech technology, see the speech capabilities overview article; for information on using SAPI 5.1 in Delphi applications, see the separate SAPI 5.1 article.

The older SAPI 4 interfaces come at two levels. The high level interfaces are intended to make implementation easier and to give quick results at the cost of some control, but they can be quite effective. The low level interfaces give full control but involve more work to get going; they are aimed at the serious programmer.

The high level interfaces are implemented by Microsoft as COM objects that call the low level interfaces, taking care of all the nitty-gritty. The low level interfaces themselves are implemented by the TTS and SR engines that you obtain and install.

This article looks at the low level interfaces available for TTS and SR. The high level interfaces are covered in a separate article.

Part of the process of speech recognition involves deciding what words have actually been spoken. Recognisers use a grammar to decide what has been said, where possible.

In the case of dictation, a grammar can be used to indicate some words that are likely to be spoken. It is not feasible to represent the entire spoken English language as a grammar, so the recogniser does its best and uses the grammar to help out. The recogniser also tries to use context information from the text to work out which words are more likely than others. At its simplest, the Microsoft SR engine can use a dictation grammar like this:

|

With Command and Control, the permitted words are limited to the supported commands. The grammar defines various rules that dictate what will be said, and this makes the recogniser's job much easier: rather than trying to understand anything spoken, it only needs to recognise speech that follows the supplied rules. A Command and Control grammar is typically referred to as a Context-Free Grammar (CFG). A simple CFG that recognises three colours might look like this:

|

Note: Start is the root rule of the grammar.

Grammars support lists to make implementing many similar commands easy. For example:

|

You can find more details about the supported grammar syntax in the SAPI 4 documentation.

The low level interfaces are implemented by the TTS and SR engines installed on your machine. These interfaces are made available as true COM interfaces and also through ActiveX controls. There are more details to worry about with these low-level interfaces, and it is recommended that you have the SAPI 4 documentation to hand to help follow what's going on.

The low level COM APIs are described as the DirectTextToSpeech API and the DirectSpeechRecognition API. These are implemented in speech.dll in the Windows speech directory, described simply as Microsoft Speech in the version information. The pertinent interfaces are all defined in the speech.pas SAPI 4 import unit.

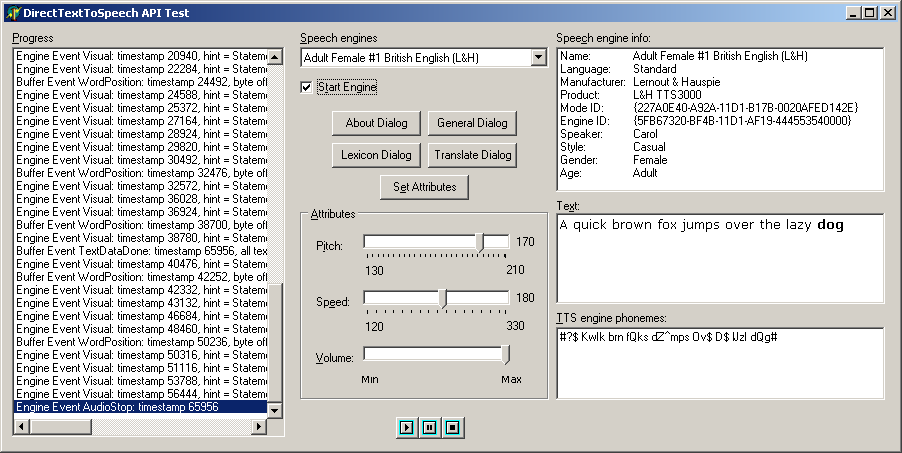

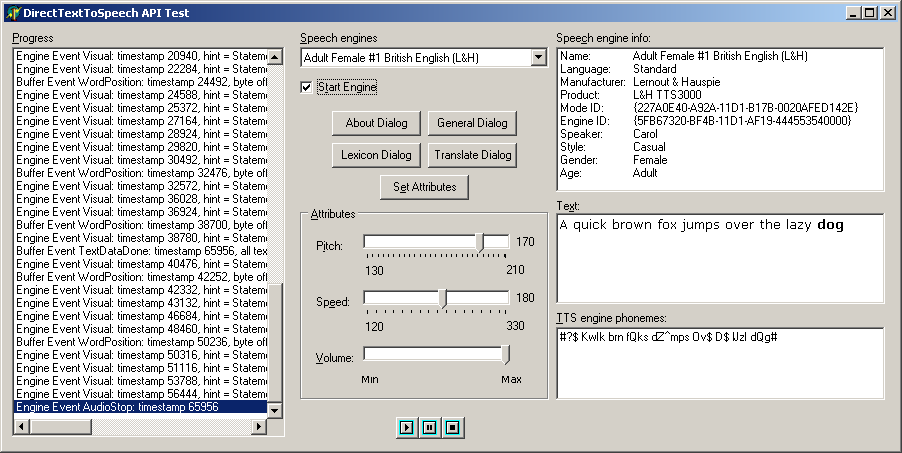

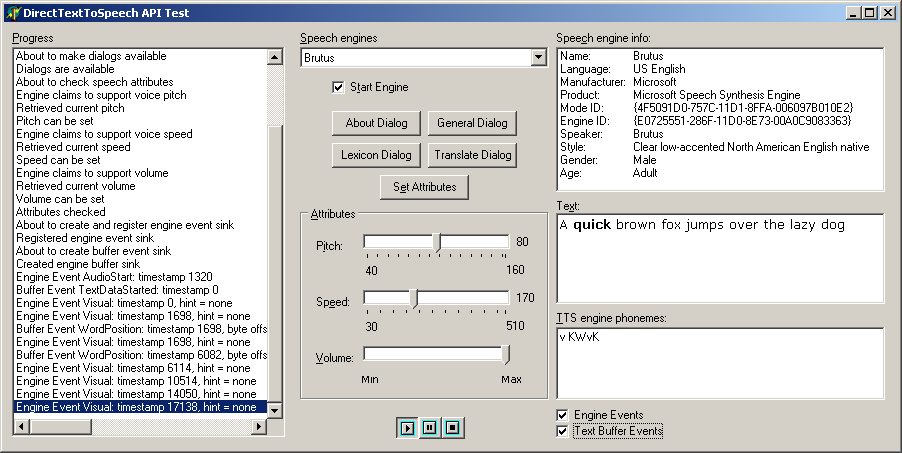

The code listed in this section comes from the sample project DirectTextToSpeech.dpr in the COM directory. The program looks like this when running:

The following sections describe the important COM objects you work with and how the sample program uses them.

The first thing you need to do is initialise an audio destination object for the speech engine object to use. For simplicity, the sample selects the default Wave Mapper as the device to work with.

|

A TTS engine typically offers various modes (different types of voices) and the enumerator can loop through them all. A TTS enumerator implements the ITTSEnum interface to allow all modes of all available engines to be listed. You can also use the ITTSFind interface to locate a specific mode by specifying preferred attributes (such as gender and age). Each mode is represented by a TTTSModeInfo record structure.

This sample application lists all supported modes in a combobox and lets the user select a mode to use. The combobox Items property stores the textual mode names in its Strings array and pointers to the corresponding mode records in the Objects array. As different modes are selected a listbox is used to display the mode attributes stored in the mode record. This way, the user can make an informed decision about which voice mode to use.

|
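The name/record pairing described above can be sketched as follows. This is a minimal, self-contained sketch rather than the sample project's code: TModeInfoStub is a hypothetical stand-in for TTTSModeInfo that carries only a mode name and the gModeID GUID, and a TStringList stands in for the combobox's Items (both are TStrings descendants, so the same AddObject and Objects calls apply). Each record is copied to the heap so that the pointer stored in Objects stays valid after the enumeration loop moves on to the next mode.

```pascal
program ModeListSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils, Classes;

type
  // Hypothetical stand-in for TTTSModeInfo from speech.pas; the real
  // record has many more fields (gender, age, interface mask and so on).
  PModeInfoStub = ^TModeInfoStub;
  TModeInfoStub = record
    gModeID: TGUID;
    ModeName: string;
  end;

function MakeModeInfo(const Name, ModeID: string): TModeInfoStub;
begin
  Result.ModeName := Name;
  Result.gModeID := StringToGUID(ModeID);
end;

// Copies the record to the heap and returns the pointer cast to TObject,
// ready to be passed as the second argument of TStrings.AddObject.
function ModeToObject(const Info: TModeInfoStub): TObject;
var
  P: PModeInfoStub;
begin
  New(P);
  P^ := Info;
  Result := TObject(P);
end;

// Recovers the record pointer from an entry in the Objects array.
function ObjectToMode(Obj: TObject): PModeInfoStub;
begin
  Result := PModeInfoStub(Obj);
end;

// Frees one heap copy created by ModeToObject.
procedure DisposeModeObject(Obj: TObject);
var
  P: PModeInfoStub;
begin
  P := PModeInfoStub(Obj);
  Dispose(P);
end;

// TStrings does not own the records, so they must be freed explicitly
// (for example in the form's OnDestroy handler).
procedure FreeModeObjects(Items: TStrings);
var
  I: Integer;
begin
  for I := 0 to Items.Count - 1 do
    DisposeModeObject(Items.Objects[I]);
  Items.Clear;
end;

var
  Items: TStringList;
begin
  Items := TStringList.Create;
  try
    Items.AddObject('Example voice', ModeToObject(MakeModeInfo('Example voice',
      '{11111111-2222-3333-4444-555555555555}')));
    // What an OnChange handler might do with Items.Objects[ItemIndex]
    Writeln(Items[0], ': ', GUIDToString(ObjectToMode(Items.Objects[0])^.gModeID));
  finally
    FreeModeObjects(Items);
    Items.Free;
  end;
end.
```

Storing a pointer rather than an index means the attribute listbox can be refreshed directly from the selected item, whatever order the combobox shows the names in.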

Once a voice mode has been chosen, it can be identified by its mode identifier (the GUID stored in the gModeID field of the TTTSModeInfo record). This can be stored with your application data in order to remember which voice was being used last. The Voice Text API also supports mode identifiers; the IVTxtAttributes.TTSModeSet method takes such a GUID.
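Persisting the chosen mode identifier might look like the sketch below. It assumes an INI file is an acceptable place for application settings; the section and key names ('Speech', 'TTSModeID') and the NoModeID constant are hypothetical. GUIDToString and StringToGUID convert between a TGUID and its registry-style text form, and a missing or malformed value falls back to a caller-supplied default.

```pascal
program ModeIDSettingsSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils, IniFiles;

const
  // Used as "no mode saved yet"; not a real SAPI mode identifier.
  NoModeID: TGUID = '{00000000-0000-0000-0000-000000000000}';
  SettingsSection = 'Speech';   // hypothetical section name
  ModeIDKey = 'TTSModeID';      // hypothetical key name

// Converts a mode GUID (such as TTTSModeInfo.gModeID) to storable text.
function ModeIDToSetting(const ModeID: TGUID): string;
begin
  Result := GUIDToString(ModeID);
end;

// Parses stored text back into a GUID, returning Default if the text is
// empty or malformed (StringToGUID raises an exception in that case).
function SettingToModeID(const S: string; const Default: TGUID): TGUID;
begin
  if S = '' then
    Result := Default
  else
    try
      Result := StringToGUID(S);
    except
      Result := Default;
    end;
end;

// IniFileName should be a full path: with a bare file name, Delphi's
// TIniFile looks in the Windows directory.
procedure SaveModeID(const IniFileName: string; const ModeID: TGUID);
var
  Ini: TIniFile;
begin
  Ini := TIniFile.Create(IniFileName);
  try
    Ini.WriteString(SettingsSection, ModeIDKey, ModeIDToSetting(ModeID));
  finally
    Ini.Free;
  end;
end;

function LoadModeID(const IniFileName: string): TGUID;
var
  Ini: TIniFile;
begin
  Ini := TIniFile.Create(IniFileName);
  try
    Result := SettingToModeID(Ini.ReadString(SettingsSection, ModeIDKey, ''), NoModeID);
  finally
    Ini.Free;
  end;
end;

begin
  // Round trip through the text form only; a real program would call
  // SaveModeID/LoadModeID with e.g. ChangeFileExt(ParamStr(0), '.ini').
  Writeln(ModeIDToSetting(SettingToModeID('{0A1B2C3D-0000-1111-2222-333344445555}', NoModeID)));
end.
```

On startup, the loaded GUID could be compared with IsEqualGUID against the gModeID of each enumerated mode to preselect the matching voice, falling back to the first mode when there is no match.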

Once a voice has been chosen, it is selected by calling ITTSEnum.Select or ITTSFind.Select, which creates an engine object. The Select method takes the desired mode identifier and an interface to an audio destination object, and returns the ITTSCentral interface of the engine object in an out parameter.

|

The engine object will probably also implement the ITTSDialogs interface, which gives access to the standard TTS engine dialogs, and the ITTSAttributes interface, which allows you to customise the voice attributes. You can either use the Supports function to see if these interfaces are supported, or check the TTTSModeInfo.dwInterfaces mask for the TTSI_ITTSATTRIBUTES or TTSI_ITTSDIALOGS flags.

This code gets access to both interfaces and checks the current voice pitch, speed and volume (these details are displayed in track bars on the form).

|
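Both ways of checking for an optional interface can be sketched with stand-in types, since the real speech.pas declarations are not reproduced here. IExampleAttributes, IExampleDialogs, their GUIDs and the TExampleEngine class are all hypothetical; only Supports (from SysUtils) is a real RTL routine. HasInterfaceFlag shows the mask test, but the actual TTSI_ITTSATTRIBUTES and TTSI_ITTSDIALOGS values should be taken from speech.pas rather than from this sketch.

```pascal
program InterfaceCheckSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils;

type
  // Hypothetical stand-ins for ITTSAttributes and ITTSDialogs.
  IExampleAttributes = interface(IUnknown)
    ['{6C0B5A43-0C1E-4D5F-9E52-1E8C2E6C9A01}']
    function GetPitch: Word;
  end;

  IExampleDialogs = interface(IUnknown)
    ['{6C0B5A43-0C1E-4D5F-9E52-1E8C2E6C9A02}']
    procedure ShowAbout;
  end;

  // A fake engine object that implements attributes but not dialogs.
  TExampleEngine = class(TInterfacedObject, IExampleAttributes)
    function GetPitch: Word;
  end;

function TExampleEngine.GetPitch: Word;
begin
  Result := 100;
end;

function NewEngine: IUnknown;
begin
  Result := TExampleEngine.Create;
end;

// Mask-based alternative: test one flag bit in a dwInterfaces value.
function HasInterfaceFlag(InterfaceMask, Flag: LongWord): Boolean;
begin
  Result := (InterfaceMask and Flag) <> 0;
end;

var
  Engine: IUnknown;
  Attributes: IExampleAttributes;
begin
  Engine := NewEngine;
  // Supports queries the object and, if successful, returns the interface.
  if Supports(Engine, IExampleAttributes, Attributes) then
    Writeln('Pitch: ', Attributes.GetPitch);
  if not Supports(Engine, IExampleDialogs) then
    Writeln('No dialogs interface: disable the dialog buttons');
end.
```

Supports has the advantage of handing back the interface reference in the same call; the mask check is useful before an engine object exists, for example to grey out options while browsing modes.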

As with the Voice Text API, there are different calls to start speech and to continue paused speech, so the same approach of using a helper flag has been employed. The text to speak is taken from a richedit control, and a TSData record is used to represent the text to be added to the speech queue.

Note: text passed to a TTS engine can include textual tags to add in things such as emphasis, pitch changes and bookmarks. You tell the TTS engine that you have tagged text by passing TTSDATAFLAG_TAGGED as the second argument to TextData.
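The helper-flag idea can be sketched without a real engine. TSpeakHelper is hypothetical: instead of calling ITTSCentral it records which call would be made, with 'queue' standing for TextData (with its TSData record) and 'pause'/'resume' standing for the engine's pause and resume calls. Check the ITTSCentral declaration in speech.pas for the exact method names before mapping them.

```pascal
program SpeakFlagSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils, Classes;

type
  // Records the engine call a real program would make at each step.
  TSpeakHelper = class
  private
    FPaused: Boolean;       // the helper flag
    FActions: TStringList;  // log of the calls that would be made
  public
    constructor Create;
    destructor Destroy; override;
    procedure SpeakClick(const Text: string);
    procedure PauseClick;
    property Paused: Boolean read FPaused;
    property Actions: TStringList read FActions;
  end;

constructor TSpeakHelper.Create;
begin
  inherited Create;
  FActions := TStringList.Create;
end;

destructor TSpeakHelper.Destroy;
begin
  FActions.Free;
  inherited Destroy;
end;

procedure TSpeakHelper.SpeakClick(const Text: string);
begin
  if FPaused then
  begin
    // Paused: continue the queued speech rather than queueing it again
    FActions.Add('resume');
    FPaused := False;
  end
  else
    // Not paused: add the text to the speech queue (TextData)
    FActions.Add('queue: ' + Text);
end;

procedure TSpeakHelper.PauseClick;
begin
  if not FPaused then
  begin
    FActions.Add('pause');
    FPaused := True;
  end;
end;

var
  Helper: TSpeakHelper;
begin
  Helper := TSpeakHelper.Create;
  try
    Helper.SpeakClick('Hello world');
    Helper.PauseClick;
    Helper.SpeakClick('Hello world');
    Writeln(Helper.Actions.CommaText);
  finally
    Helper.Free;
  end;
end.
```

Without the flag, pressing Speak after Pause would queue the same text a second time instead of continuing where the engine stopped.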

The DirectTextToSpeech API supports two notification interfaces. One is set up each time you call TextData (we'll come back to this one) and the other is set up just once.

The notification interface that requires a one-off setup is ITTSNotifySink. Once you have created an object that implements this interface you must register it with the TTS engine.

|

You can later unregister the sink by passing the cookie returned at registration to the UnRegister method. The notifications supported are much the same as with the Voice Text API, so again the engine phonemes that represent the text to be spoken are listed in a separate memo.

As text is added to the speech buffer (when you call TextData), a reference to an object that implements the buffer notification interface (ITTSBufNotifySink) is passed along, as you can see in the call listed earlier. This object is created only if the corresponding check box on the form is checked, and it logs calls to the buffer notification methods, which are BookMark, TextDataDone, TextDataStarted and WordPosition.

The WordPosition notification is particularly useful. Since we are displaying the spoken text as engine phonemes, it tells us where to insert spaces between words. We can also use it to highlight in the UI the text being spoken: as each word starts being processed, the code emboldens the corresponding word in the richedit control (and restores the previous word to normal, if appropriate). This gives a nice effect:

|
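Finding the word to embolden from the position reported by WordPosition can be sketched as a pure string function. It assumes the notification supplies a zero-based character offset into the text that was passed to TextData; WordSpanAt and TWordSpan are hypothetical names, and only whitespace is treated as a word separator.

```pascal
program WordSpanSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils;

type
  // Zero-based start and length, matching TRichEdit.SelStart/SelLength.
  TWordSpan = record
    Start: Integer;
    Len: Integer;
  end;

function IsWordSeparator(C: Char): Boolean;
begin
  Result := (C = ' ') or (C = #9) or (C = #10) or (C = #13);
end;

// Returns the word that begins at (or just after) the zero-based Offset in
// Text. Len is 0 when Offset is out of range or only separators follow.
function WordSpanAt(const Text: string; Offset: Integer): TWordSpan;
var
  First, Last: Integer;
begin
  Result.Start := 0;
  Result.Len := 0;
  if (Offset < 0) or (Offset >= Length(Text)) then
    Exit;
  First := Offset + 1; // Delphi strings are 1-based
  while (First <= Length(Text)) and IsWordSeparator(Text[First]) do
    Inc(First);
  Last := First;
  while (Last <= Length(Text)) and not IsWordSeparator(Text[Last]) do
    Inc(Last);
  if Last > First then
  begin
    Result.Start := First - 1;
    Result.Len := Last - First;
  end;
end;

var
  Span: TWordSpan;
begin
  Span := WordSpanAt('Hello brave world', 6);
  Writeln('Start=', Span.Start, ' Len=', Span.Len);
end.
```

In the buffer sink's WordPosition handler, the form could then restore the previous span to normal and embolden the new one with RichEdit1.SelStart := Span.Start, RichEdit1.SelLength := Span.Len and RichEdit1.SelAttributes.Style := [fsBold]. This assumes the engine's offsets line up one-to-one with rich edit character positions, which may not hold once the text contains line breaks.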

Using the DirectSpeechRecognition API you can achieve Command and Control as well as Dictation speech recognition, depending on the grammar that you supply. With the high level interfaces we didn't have to worry about this, as the high level objects set up a default grammar (although you can change it).

For Command and Control you need a Context Free Grammar (CFG) and for Dictation you need a Dictation Grammar. We'll see simple examples of these as we proceed and you can find more information in the SAPI 4 documentation.

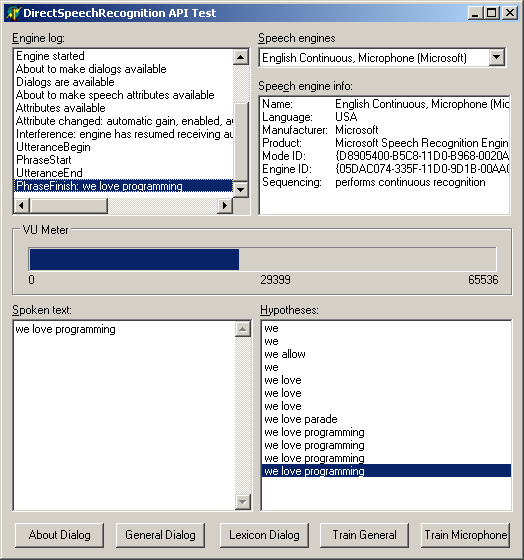

A sample project using the DirectSpeechRecognition API can be found as DirectSpeechRecognitionAPI.dpr in the COM directory. The program looks like this when running:

The following sections describe the important COM objects you work with and how the sample program uses them.

The first thing you need to do is initialise an audio source object that will be used by the speech engine object, telling it which audio device to use.

|

An SR engine typically offers different speech recognition modes (such as one for microphone and one for telephone) and the enumerator can loop through them all. An SR enumerator implements the ISREnum interface to allow all modes of all available engines to be listed. You can also use the ISRFind interface to locate a specific mode by specifying preferred attributes. Each mode is represented by a TSRModeInfo record structure.

This sample application lists all supported modes in a combobox and lets the user browse the available modes, but automatically selects the first mode to use. The combobox Items property stores the textual mode names in the Strings array and pointers to the corresponding mode records in the Objects array. As different modes are selected a listbox is used to display the mode attributes stored in the mode record. This way, the user can see the attributes of the various modes on offer.

|

A recognition mode can be identified by its mode identifier (the GUID stored in the gModeID field of the TSRModeInfo record). This can be stored with your application data in order to remember which mode was used last.

A recognition mode is selected by calling ISREnum.Select or ISRFind.Select, both of which create an engine object. The Select method takes the desired mode identifier and an interface to an audio source object, and returns the ISRCentral interface of the engine object in an out parameter.

|

The engine object will probably also implement the ISRDialogs interface, which gives access to the standard SR engine dialogs, and the ISRAttributes interface, which allows you to check on the SR attributes. You can either use the Supports function to see if these interfaces are supported, or check the TSRModeInfo.dwInterfaces mask for the SRI_ISRATTRIBUTES or SRI_ISRDIALOGS flags.

|

Next a grammar compiler object is used to take a grammar definition (a simple dictation grammar) and compile it. This compiled grammar will be passed along to the SR engine shortly. Some brief information about grammars is given towards the start of this article and you can find more information in the SAPI documentation.

|

The grammar compiler can generate messages indicating if anything was wrong with the grammar (or just that the grammar compiled successfully). If you wish to see the error message you can change the code to:

|

The grammar compiler can now load the compiled grammar into the engine object and, whilst doing so, set up a notification object that receives recognition-related notifications through the ISRGramNotifySink interface. These include the PhraseStart, PhraseHypothesis and PhraseFinish notifications (among others) that we saw in the high level Voice Dictation API.

|

Another notification interface (ISRNotifySink) informs the application of status changes. A notification sink object implementing this interface is set up to receive them and, like most of the other notifications in the program, they are logged to a listbox on the form.

|

The final job is to set up the speaker whose voice recognition profile you wish to use (and whose profile will be modified if more SR training takes place). Again, this example hard codes a specific speaker profile - in real applications you may prefer to store this with your application data.

|

The ActiveX controls wrap up the DirectTextToSpeech and DirectSpeechRecognition APIs and are described as ActiveVoice (or the Direct Speech Synthesis Control) and ActiveListen (or the Direct Speech Recognition Control).

Ready-made packages for Delphi 5 and Delphi 6 containing the ActiveX units can be found in appropriately named subdirectories under SAPI 4 in the accompanying files.

The Microsoft Direct TextToSpeech control (or Direct Text-to-Speech control, as its type library describes it) is an ActiveX control that wraps up the low level DirectTextToSpeech API. To use it you must first import the ActiveX control into Delphi; you will find it described as Microsoft Direct Text-to-Speech (Version 1.0).

This will generate and install a type library import unit called ACTIVEVOICEPROJECTLib_TLB.pas. The import unit contains the ActiveX component wrapper class called TDirectSS.

The ActiveX is implemented in XVoice.dll in the Windows speech directory (whose version information describes it as the DirectSpeechSynthesis Module) and the primary interface implemented is IDirectSS.

The control surfaces various DirectTextToSpeech interfaces such as ITTSCentral, ITTSAttributes, ITTSDialogs and ITTSFind. This means that the control exposes methods and properties to speak, get and set the pitch, speed and volume of the speech, invoke the engine dialogs, identify if the engine is currently speaking and locate engines.

It also sends all the notifications from the ITTSNotifySink, ITTSNotifySink2 and ITTSBufNotifySink interfaces through ActiveX events.

You can programmatically work with this ActiveX control using the ProgID ActiveVoice.ActiveVoice or the ClassID CLASS_DirectSS from the ACTIVEVOICEPROJECTLib_TLB unit. The Windows registry describes this class as the ActiveVoice Class.

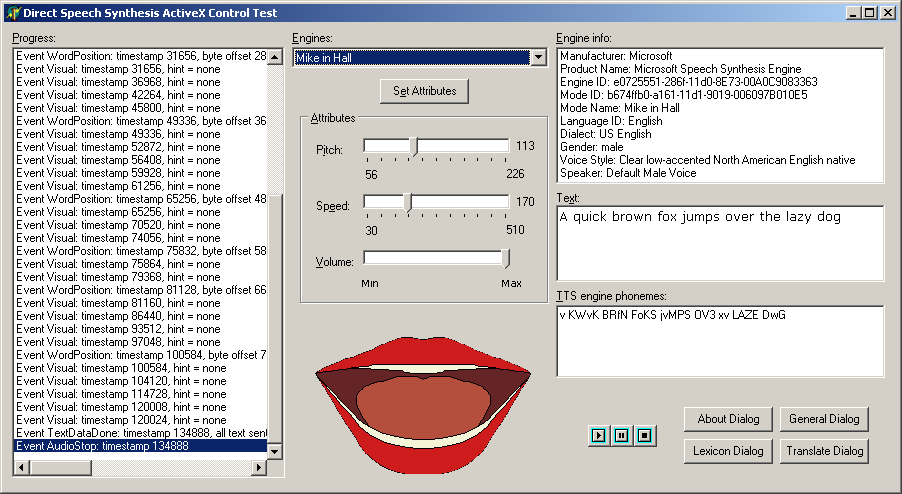

Alternatively (and more typically) you can simply drop the ActiveX component on a form as is done by the sample project DirectSSControl.dpr in the ActiveX directory. The project does much the same as the DirectTextToSpeech API project, but the ActiveX control provides an animated mouth that shows on the form.

Much of the work involved in the DirectTextToSpeech API version is simplified by the ActiveX control wrapping up the niggly details. Because changing the engine mode is so straightforward, in this project the mode changes as soon as you choose a different item in the combobox.

The control manages a list of all modes (the number of modes is given by the CountEngines property and the active one by CurrentMode). When the combobox is populated, the Strings array of its Items property is filled with the descriptive mode names, whereas the Objects array is simply filled with the mode indices.

|
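Storing mode indices in the Objects array can be sketched as follows. This is a sketch under assumptions: the mode names are passed in as a plain array (in the sample they would come from the control, one per mode up to CountEngines), a TStringList stands in for the combobox's Items, and modes are numbered from 0 here, which should be checked against how the control numbers CurrentMode. ModeIndexToObject, ModeIndexFromObject and FillModeNames are hypothetical helper names.

```pascal
program ModeIndexSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils, Classes;

// Packs a mode index into the Objects array. NativeInt keeps the cast the
// same size as a pointer on both 32-bit and 64-bit targets.
function ModeIndexToObject(Index: Integer): TObject;
begin
  Result := TObject(NativeInt(Index));
end;

function ModeIndexFromObject(Obj: TObject): Integer;
begin
  Result := Integer(NativeInt(Obj));
end;

// Fills Items (e.g. a combobox's Items) with mode names, storing each
// name's position in the control's mode list alongside it.
procedure FillModeNames(Items: TStrings; const Names: array of string);
var
  I: Integer;
begin
  Items.BeginUpdate;
  try
    Items.Clear;
    for I := 0 to High(Names) do
      Items.AddObject(Names[I], ModeIndexToObject(I));
  finally
    Items.EndUpdate;
  end;
end;

var
  Items: TStringList;
  I: Integer;
begin
  Items := TStringList.Create;
  try
    FillModeNames(Items, ['Mike', 'Mary', 'Sam']);
    Items.Sort; // sorting the display keeps each name paired with its index
    for I := 0 to Items.Count - 1 do
      Writeln(Items[I], ' -> mode ', ModeIndexFromObject(Items.Objects[I]));
  finally
    Items.Free;
  end;
end.
```

In the combobox's OnChange handler, the stored index would be read back with ModeIndexFromObject(ComboBox1.Items.Objects[ComboBox1.ItemIndex]) and presumably assigned to the control's CurrentMode. Unlike the record pointers in the API version, plain integers need no freeing.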

The Microsoft Direct Speech Recognition control is an ActiveX control that wraps up the low level DirectSpeechRecognition API. To use it you must first import the ActiveX control into Delphi; you will find it described as Microsoft Direct Speech Recognition (Version 1.0).

This will generate and install a type library import unit called ACTIVELISTENPROJECTLib_TLB.pas. The import unit contains the ActiveX component wrapper class called TDirectSR.

The ActiveX is implemented in xlisten.dll in the Windows speech directory (whose version information describes it as the DirectSpeechRecognition Module) and the primary interface implemented is IDirectSR.

You can programmatically work with this ActiveX control using the ProgID ActiveListen.ActiveListen or the ClassID CLASS_DirectSR from the ACTIVELISTENPROJECTLib_TLB unit. The Windows registry describes this class as the ActiveListen Class.

Alternatively (and more typically) you can simply drop the ActiveX component on a form. That is what has been done with the sample project DirectSpeechRecognitionControl.dpr in the ActiveX directory. This project does much the same as the DirectSpeechRecognitionAPI.dpr project and involves very much the same type of code.

Note: The OnPhraseHypothesis event does not fire with this control (though OnPhraseStart does).

If SR stops (or fails to start) unexpectedly, or shows other odd behaviour, check that the microphone is enabled in your recording settings.

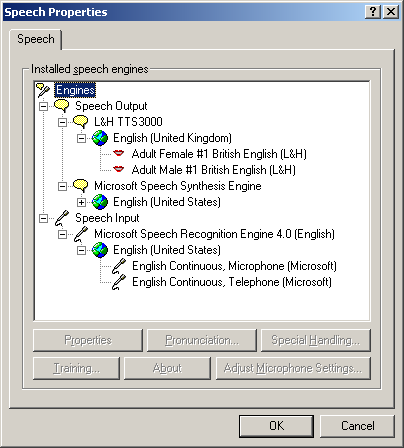

When distributing SAPI 4 applications you will need to supply the redistributable components (available as spchapi.exe from http://www.microsoft.com/speech/download/old). It is also advisable to deploy the Speech Control Panel applet (available as spchcpl.exe from http://www.microsoft.com/msagent/downloads.htm), although this applet will not install on any version of Windows later than Windows 2000.

The Microsoft SAPI 4 compliant TTS engine can be downloaded from various sites (although not Microsoft's), such as http://misterhouse.net:81/public/speech/ or http://stono.cs.cofc.edu/~manaris/SUITEKeys/.

As well as the Microsoft TTS engine, you can also download additional TTS engines from Lernout & Hauspie (including one that uses a British English voice) from http://www.microsoft.com/msagent/downloads.htm. Note that if you plan to use any of these engines from applications running under user accounts without administrator privileges, you need to do some registry tweaking, as described at http://www.microsoft.com/msagent/detail/tts3000deploy.htm.

You can download the Microsoft Speech Recognition engine for use with SAPI 4 from http://www.microsoft.com/msagent/downloads.htm.


Brian Long used to work at Borland UK, performing a number of duties including Technical Support on all the programming tools. Since leaving in 1995, Brian has spent the intervening years as a trainer, trouble-shooter and mentor focusing on the use of the C#, Delphi and C++ languages, and of the Win32 and .NET platforms. In his spare time Brian actively researches and employs strategies for the convenient identification, isolation and removal of malware. If you need training in these areas or need solutions to problems you have with them, please get in touch or visit Brian's Web site.

Brian authored a Borland Pascal problem-solving book in 1994 and occasionally acts as a Technical Editor for Wiley (previously Sybex); he was the Technical Editor for Mastering Delphi 7 and Mastering Delphi 2005 and also contributed a chapter to Delphi for .NET Developer Guide. Brian is a regular columnist in The Delphi Magazine and has had numerous articles published in Developer's Review, Computing, Delphi Developer's Journal and EXE Magazine. He was nominated for the Spirit of Delphi award in 2000.
